Tokenizing Words and Sentences with NLTK

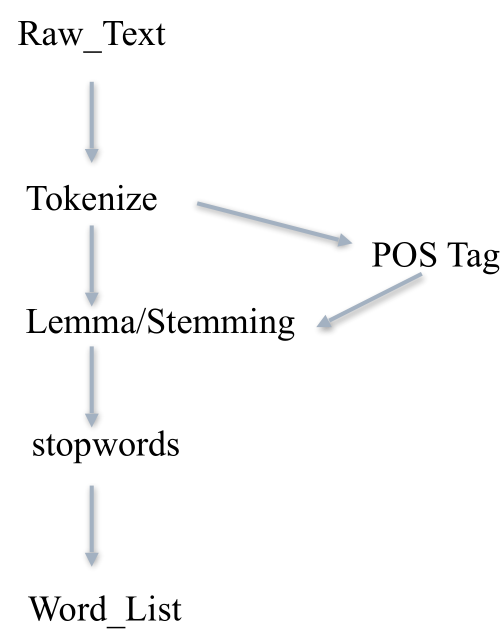

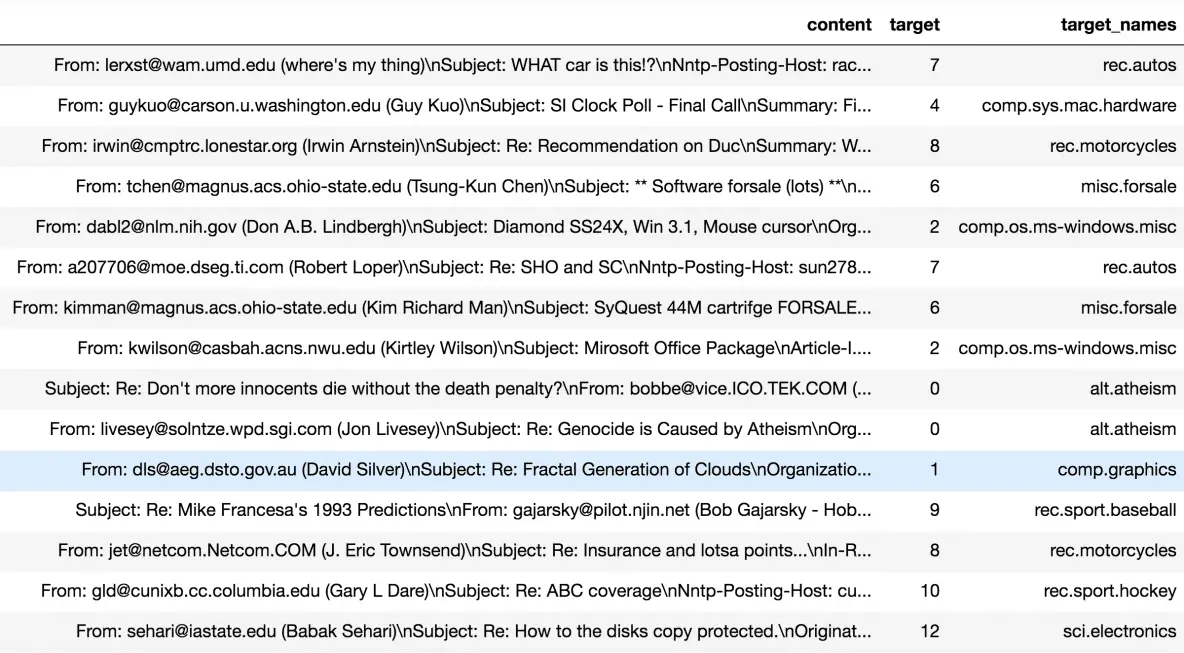

NLTK (Natural Language Toolkit) is one of the leading platforms for working with human language data in Python. The nltk module is used for natural language processing.

In this article you will learn how to tokenize data (by words and sentences).

Install NLTK

Install NLTK with Python 2.x by running pip install nltk at the command prompt.

Install NLTK with Python 3.x by running pip3 install nltk.

Installation is not complete after these commands. Open Python, import nltk, and call nltk.download().

A graphical interface will be presented.

Click all and then click download. This downloads all the required packages, which may take a while; the bar at the bottom shows the progress.

Tokenize words

A sentence or other text can be split into words using the method word_tokenize().

For a sentence that contains a comma, all of the tokens in the output are words except the comma. Special characters are treated as separate tokens.

Tokenizing sentences

The same principle can be applied to sentences. Simply change the method to sent_tokenize().

When the variable data holds two sentences, the output is a list with one entry per sentence.

NLTK and arrays

If you wish, you can store the words and sentences in arrays (Python lists) by assigning the tokenizer results to variables.

Since the root of the problem is bandwidth abuse, it seems a poor idea to recommend manual fetching of the entire nltk_data tree as a work-around. How about you show us how resource ids map to URLs, @alvations, so I can wget just the punkt bundle, for example?

The long-term solution, I believe, is to make it less trivial for beginning users to fetch the entire data bundle (638MB compressed, when I checked). Instead of arranging (and paying for) more bandwidth to waste on pointless downloads, stop providing 'all' as a download option; the documentation should instead show the inattentive scripter how to download the specific resource(s) they need. And in the meantime, get out of the habit of writing nltk.download('all') (or equivalent) as sample or recommended usage, on stackoverflow (I'm looking at you, @alvations) and in the downloader docstrings. (For exploring the nltk, nltk.download('book'), not 'all', is just as useful and much smaller.)

At present it is difficult to figure out which resource needs to be downloaded; if I install the nltk and try out nltk.pos_tag(['hello', 'friend']), there's no way to map the error message to a resource ID that I can pass to nltk.download(<resource id>). Downloading everything is the obvious work-around in such cases. If nltk.data.load() or nltk.data.find() can be patched to look up the resource id in such cases, I think you'll see your usage on nltk_data go down significantly over the long term.
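
Until such a lookup exists, a script can check for one resource and fetch only that resource when it is missing. The following is a rough sketch, not part of NLTK: ensure_resource is a hypothetical helper, and the caller has to supply both the path inside nltk_data and the matching download id, because the sketch does not derive one from the other.

```python
import nltk


def ensure_resource(resource_path, resource_id, downloader=nltk.download):
    """Return True if the resource is available, downloading it only if missing.

    resource_path: path inside nltk_data, for example "tokenizers/punkt".
    resource_id:   id handed to the downloader, for example "punkt".
    downloader:    callable used to fetch the resource (hypothetical hook,
                   defaults to nltk.download).
    """
    try:
        # nltk.data.find() raises LookupError when the resource is absent.
        nltk.data.find(resource_path)
        return True
    except LookupError:
        # Fetch just this one resource instead of the whole data bundle.
        return bool(downloader(resource_id, quiet=True))
```

For example, ensure_resource("tokenizers/punkt", "punkt") would download only the punkt bundle, and only when it is not already installed.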